This is an R&D project in the research area "Human-Information Technology Ecosystem," which is run by the Research Institute of Science and Technology for Society (RISTEX) of the Japan Science and Technology Agency (JST). In recent years, AI technology has been giving artificial systems and robots a certain kind of autonomy. Such quasi-autonomous systems may behave in ways beyond their designers’ expectations, just like children starting to become independent from their parents. However, under the current legal system, the designers or users may incur almost unlimited legal liability, which may chill the development of potentially beneficial science and technology. In this project, we assume three stages of autonomy for artificial systems, based on the extent of their ability to set and modify their own objectives and on the complexity of their computation. We will then devise legal models corresponding to the three stages by analyzing the legal doctrines of the juridical person and the history of the concept of persona. Furthermore, having identified the legal problems of the current conceptions of liability, especially in criminal law, we will propose a new concept of legal liability for artificial systems as “Legal Beings.” We will also propose, through mock trials using an android, legal provisions to realize the “NAJIMI” society. This project may contribute to a better understanding of the concept of autonomy and offer some preferable models of artificial systems.

Project Vision

Studies on science and technology law typically focus on how to regulate the development of a new technology and how to hold its stakeholders liable for the possible damage it causes. However, recent autonomous artificial intelligence is showing the limits of such conventional legal methods. For example, for an artificial system with high autonomy that adaptively communicates with humans and modifies its behavioral rules, neither the designer nor the user can be considered fully in “control.” Thus, the conventional methods that place responsibility only on the people involved might not be suitable for managing research and development properly. In dealing with this issue, numerous studies have focused on the question of whether “legal personhood” status can be extended to artificial systems. It has been widely debated whether to create a new category of legal personhood applicable to artificial systems and robots. However, current studies of the legal personhood of artificial systems and robots pay little attention to the conception of legal personality, although, historically, the nature of legal personality has provoked extensive disagreement. Rather than a superficial consideration of regulatory methods, a fundamental reconsideration of traditional legal principles is now necessary. Our project may offer this by presenting an alternative to the old legal dogma that recognizes only humans as legal persons and legally liable agents. It may thereby stimulate debate on the desired legal and social status of artificial systems. A critical analysis of the concept of the subject, which assumes only humans as subjects and non-humans as tools, provides a useful means of assessing the problems of the old legal dogma. We then consider how we can modify it to render artificial systems “Legal Beings,” a status that can make them legally liable.
To identify some necessary conditions for artificial systems to become legal beings, members of this project, comprising jurists and engineers, collaborate and interact with each other. Our final goal is to prepare for the realization of intelligent and autonomous systems in a future NAJIMI society.

What is the "NAJIMI" Society?

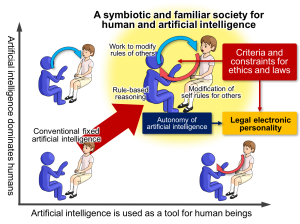

In the “NAJIMI” (なじみ in Japanese) society, “artificial systems and humans harmonize (adapt to each other).” The NAJIMI society contrasts with two extreme situations: (1) “a society where artificial intelligence dominates humans”; and (2) “a society where humans use artificial intelligence merely as a tool.” Regarding (1), with the recent rise of artificial intelligence, typified by deep learning, some warn that not only blue-collar jobs but also white-collar jobs requiring high levels of expertise, such as legal practice and scientific research, will be threatened by artificial intelligence (Martin Ford, Rise of the Robots, 2015). Authors who raise fears over artificial intelligence often describe a world in which, through infinite expansion, artificial intelligence develops omniscient ability. In such a world, forms of artificial intelligence communicate only with one another, rendering communication between humans and artificial intelligence unnecessary. This is society (1). Among those who dislike this scenario, society (2) tends to be excessively idealized.

Unlike both societies (1) and (2), in the NAJIMI society, artificial intelligence not only modifies its own behavioral rules but also advises humans to change their rules through communication with them. If artificial intelligence and humans interactively create social rules in this way, artificial intelligence would neither become a mere tool of humans nor exclude or control them.

Groups

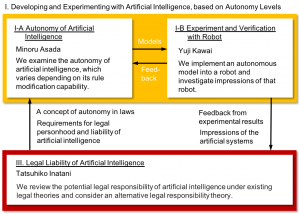

I. Developing and Experimenting with Artificial Intelligence, based on Autonomy Levels

I-A. Autonomy of Artificial Intelligence (Minoru Asada, Osaka University)

We examine the autonomy of artificial intelligence, which varies depending on its rule modification capability. The levels of autonomy range from fixed rules, which are preprogrammed by the designer, to adaptive rules, which are learned through interaction with humans. We construct a general model that can deal with these levels comprehensively, and examine how acceptable each level is to human society from the perspective of the legal system.

I-B. Experiment and Verification with Robot (Yuji Kawai, Osaka University)

We implement a model that can modify its behavioral rules through human-robot interaction, based on the overall results of this project. For each level of robot autonomy, we investigate people's impressions of the robot they face and how they subjectively attribute responsibility.

II. Legal Liability of Artificial Intelligence (Tatsuhiko Inatani, Kyoto University)

First, we review the potential legal responsibility that might be imposed on designers, developers, holders, occupiers, users, etc. of artificial intelligence under existing legal theories and ethics. Then, we critically analyze the philosophical presuppositions of existing legal theories and ethics, which essentialize the human as a subject and the non-human as an object (humanism). Based on our examination of these presuppositions, and by applying the “morality of things” theory, we consider an alternative theory of ethical and legal responsibility, which can accept artificial intelligence as a trustworthy and moral agent in the NAJIMI society. In addition, we consider the requirements for, and the pros and cons of, legal personhood for artificial systems with regard to legal liability.

(The Requirements for Legal Personhood Group (group leader: Konatsu Nishigai) has been dissolved since September 2018. See here for the past groups.)